Bayesian Optimization


This will be a very quick tutorial on using Optuna, a Bayesian optimization library, together with Weights & Biases, which I hope can become my platform for storing model training logs.

I have tried other platforms such as MLflow (you can find a tutorial here), which I found extremely hard to learn and very unintuitive. I don't think it is a bad tool, but it never fully convinced me. I also looked at Neptune, but it is a relatively new library with few stars on GitHub.

Weights & Biases, on the other hand, is becoming one of the most widely used tools in research, and it is considerably simpler than the alternatives, although it has a slight learning curve. In my opinion the platform is intuitive, but I feel the documentation deserves more effort.

To try it out quickly, let's see whether it can solve a simple optimization problem.

Consider the following example: \[y = x^2 + 1\]

Finding the minimum of this function analytically is easy: differentiate and set the derivative equal to zero:

\(y' = 2x = 0 \Rightarrow x = 0\), \(y(0) = 0^2 + 1 = 1\)

So the minimum of this parabola is at the point (0, 1).
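To confirm that this stationary point is a minimum rather than a maximum, we can also check the second derivative:

```latex
y'' = \frac{d^2}{dx^2}\left(x^2 + 1\right) = 2 > 0
```

Since \(y''\) is positive for every \(x\), the function is convex, so \(x = 0\) is its global minimum.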

Using Optuna

Optuna is a library designed for optimization problems in general, but it is most commonly used for hyperparameter search: finding the hyperparameters that yield the best value of a metric, which can mean maximizing it (accuracy or R²) or minimizing it (log loss or MSE).

You therefore provide a range for each hyperparameter and the number of samples (trials) to draw from it. Because this is Bayesian optimization, each new trial uses the results of the previous ones to move closer to the true optimum, without having to run a grid search (evaluating every single possible combination).
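For comparison, here is a minimal stdlib sketch of the exhaustive grid search mentioned above (not how Optuna works): it evaluates every point of a fixed grid over the same range, regardless of what earlier evaluations returned. The function names are hypothetical, chosen only for this illustration.

```python
def f(x: float) -> float:
    """Objective from the tutorial: y = x^2 + 1."""
    return x ** 2 + 1


def grid_search(lo: float, hi: float, n_points: int):
    """Evaluate f on n_points evenly spaced values in [lo, hi]; return best x, best y, #evaluations."""
    step = (hi - lo) / (n_points - 1)
    candidates = [lo + i * step for i in range(n_points)]
    best_x = min(candidates, key=f)  # every candidate is evaluated, no history is used
    return best_x, f(best_x), len(candidates)


best_x, best_y, n_evals = grid_search(-10.0, 10.0, 201)
print(best_x, best_y, n_evals)
```

The cost grows with the grid resolution (and exponentially with the number of hyperparameters), which is what a sequential, history-aware search tries to avoid.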

Since the process is stochastic, it may fail to converge to the optimum, so you need to give it a sufficient number of trials for it to work as expected.
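To illustrate why the number of trials matters, the sketch below uses plain random search, a simpler technique than Optuna's default sampler, swapped in only for illustration. With a fixed seed, each longer run contains the shorter runs as a prefix, so the best value found can only stay equal or improve as `n_trials` grows. `random_search` is a hypothetical helper.

```python
import random


def f(x: float) -> float:
    """Objective from the tutorial: y = x^2 + 1."""
    return x ** 2 + 1


def random_search(n_trials: int, lo: float = -10.0, hi: float = 10.0, seed: int = 0) -> float:
    """Sample x uniformly n_trials times and return the best (lowest) objective seen."""
    rng = random.Random(seed)
    best = float("inf")
    for _ in range(n_trials):
        best = min(best, f(rng.uniform(lo, hi)))
    return best


for n in (5, 50, 500):
    print(n, random_search(n))
```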

import wandb

import optuna

The first step is to create an account at wandb.ai/login. I recommend signing up with your GitHub account, since that makes it easier to link the code in your repositories to the platform.

wandb.login()

wandb: Currently logged in as: datacuber (use `wandb login --relogin` to force relogin)

True

wandb.login() logs you in to the platform. The first time, it asks you for your API key, but after that the key is stored on your system. I really liked this because I didn't have to configure anything. Other platforms such as Neptune ask you to store the authentication token in an environment variable yourself, which can be more complicated on operating systems like Windows.

Optimizing with Optuna

We define a range in which we suspect the optimum we care about lies, in this case the global minimum. It is defined as a dictionary as follows:

rango = {'min': -10,

'max': 10}

Next we define a function, which can have any name we like. It must take a trial argument, which represents a single evaluation. The function picks a value from the given range, in this case with trial.suggest_float() (older Optuna versions used trial.suggest_uniform(), which is deprecated since Optuna 3.0), and returns the expression to optimize evaluated at that value.

There are several ways to sample values, depending on the type of variable and on how we want to sample it (see the Optuna documentation for details).

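def optimize_squared(trial):
    # sample x uniformly from the search range (suggest_float replaces the deprecated suggest_uniform)
    x = trial.suggest_float('x', rango['min'], rango['max'])
    return x ** 2 + 1  # the function we want to minimize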
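To make the contract between Optuna and the objective concrete, here is a minimal sketch with a hypothetical `FakeTrial` class (not part of Optuna) that implements only a `suggest_float`-like method: it samples uniformly and records the value in `params`, much as a real trial exposes its sampled values through `trial.params`. The point is that the objective simply asks the trial for a value and returns a number.

```python
import random


class FakeTrial:
    """Hypothetical stand-in for optuna.trial.Trial (NOT the real class)."""

    def __init__(self, seed=None):
        self._rng = random.Random(seed)
        self.params = {}  # name -> sampled value

    def suggest_float(self, name, low, high):
        """Sample uniformly in [low, high] and remember the value under `name`."""
        value = self._rng.uniform(low, high)
        self.params[name] = value
        return value


rango = {'min': -10, 'max': 10}


def optimize_squared(trial):
    x = trial.suggest_float('x', rango['min'], rango['max'])
    return x ** 2 + 1


trial = FakeTrial(seed=0)
value = optimize_squared(trial)
print(trial.params, value)
```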

Finally, to run the optimization we create a study with direction='minimize', pass it the objective function and the number of trials, and that's it.

study = optuna.create_study(direction = 'minimize')

study.optimize(optimize_squared, n_trials=50)

[I 2021-06-28 23:09:18,931] A new study created in memory with name: no-name-8cdd525d-2017-462c-b030-427b6c38ec32

[I 2021-06-28 23:09:18,937] Trial 0 finished with value: 23.902017490831042 and parameters: {'x': -4.785605237671724}. Best is trial 0 with value: 23.902017490831042.

[I 2021-06-28 23:09:18,941] Trial 1 finished with value: 2.597698699418838 and parameters: {'x': -1.2640010678076337}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,943] Trial 2 finished with value: 31.022405959147648 and parameters: {'x': -5.479270568163946}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,946] Trial 3 finished with value: 67.73334832123625 and parameters: {'x': 8.16904818943041}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,948] Trial 4 finished with value: 24.48057869420729 and parameters: {'x': 4.8456762886316795}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,950] Trial 5 finished with value: 21.09260385213723 and parameters: {'x': 4.4824774234944265}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,952] Trial 6 finished with value: 68.65242786697495 and parameters: {'x': -8.225109596046423}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,954] Trial 7 finished with value: 41.22835487370143 and parameters: {'x': -6.342582665894188}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,955] Trial 8 finished with value: 39.87219088922785 and parameters: {'x': 6.234756682439809}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,956] Trial 9 finished with value: 4.320442596901529 and parameters: {'x': -1.8222081650847493}. Best is trial 1 with value: 2.597698699418838.

[I 2021-06-28 23:09:18,961] Trial 10 finished with value: 1.5979800173633043 and parameters: {'x': 0.7732916767709996}. Best is trial 10 with value: 1.5979800173633043.

[I 2021-06-28 23:09:18,965] Trial 11 finished with value: 1.0345315007217994 and parameters: {'x': 0.18582653395519022}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,968] Trial 12 finished with value: 7.060819899863781 and parameters: {'x': 2.461873250162116}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,971] Trial 13 finished with value: 3.9300176669297717 and parameters: {'x': 1.711729437419878}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,975] Trial 14 finished with value: 1.0468883547570575 and parameters: {'x': 0.21653719024005427}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,978] Trial 15 finished with value: 8.572676590204937 and parameters: {'x': -2.7518496670793877}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,981] Trial 16 finished with value: 93.63719717806818 and parameters: {'x': 9.624821929680994}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,985] Trial 17 finished with value: 10.927202880804595 and parameters: {'x': -3.150746400585835}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,988] Trial 18 finished with value: 1.0628486643725503 and parameters: {'x': 0.2506963589136273}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,992] Trial 19 finished with value: 11.672926127784672 and parameters: {'x': 3.266944463529289}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,996] Trial 20 finished with value: 96.53703677659003 and parameters: {'x': -9.77430492549675}. Best is trial 11 with value: 1.0345315007217994.

[I 2021-06-28 23:09:18,999] Trial 21 finished with value: 1.0073560041574146 and parameters: {'x': 0.08576715080620578}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,002] Trial 22 finished with value: 1.5569047360360124 and parameters: {'x': -0.7462605014577767}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,005] Trial 23 finished with value: 1.6162128738110146 and parameters: {'x': 0.7849922762747508}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,009] Trial 24 finished with value: 15.627953973496544 and parameters: {'x': -3.824650830271509}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,012] Trial 25 finished with value: 12.001754025332767 and parameters: {'x': 3.3168892090832287}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,015] Trial 26 finished with value: 1.1010945372091034 and parameters: {'x': -0.31795367148234577}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,019] Trial 27 finished with value: 5.690980650893347 and parameters: {'x': -2.165867182191315}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,022] Trial 28 finished with value: 3.881763808043724 and parameters: {'x': 1.6975758622352415}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,026] Trial 29 finished with value: 22.704288239315446 and parameters: {'x': -4.658786133674248}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,030] Trial 30 finished with value: 34.57070775311028 and parameters: {'x': 5.794023451204722}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,034] Trial 31 finished with value: 1.786438806863386 and parameters: {'x': 0.8868138513032969}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,038] Trial 32 finished with value: 1.13515549875073 and parameters: {'x': -0.36763500751523914}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,042] Trial 33 finished with value: 3.034146749479723 and parameters: {'x': -1.4262351662610633}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,046] Trial 34 finished with value: 8.00690795051327 and parameters: {'x': 2.647056469082832}. Best is trial 21 with value: 1.0073560041574146.

[I 2021-06-28 23:09:19,049] Trial 35 finished with value: 1.0056068731049788 and parameters: {'x': 0.07487905651768616}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,053] Trial 36 finished with value: 18.948200564457768 and parameters: {'x': 4.236531666877727}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,057] Trial 37 finished with value: 3.0220522532879452 and parameters: {'x': -1.4219888372585578}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,061] Trial 38 finished with value: 41.17041626306449 and parameters: {'x': -6.338013589687583}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,064] Trial 39 finished with value: 3.9786140127217138 and parameters: {'x': 1.725866163038639}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,068] Trial 40 finished with value: 19.75443303437414 and parameters: {'x': -4.330638871387701}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,072] Trial 41 finished with value: 1.067267840439896 and parameters: {'x': 0.25936044501792493}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,076] Trial 42 finished with value: 1.8919174991521621 and parameters: {'x': -0.9444138389245269}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,080] Trial 43 finished with value: 1.0853379277082995 and parameters: {'x': 0.2921265611140137}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,084] Trial 44 finished with value: 5.7274003922895185 and parameters: {'x': -2.1742585845040416}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,088] Trial 45 finished with value: 2.7663831230225453 and parameters: {'x': 1.3290534688350748}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,092] Trial 46 finished with value: 9.336070192939113 and parameters: {'x': -2.8872253450222956}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,096] Trial 47 finished with value: 7.29747062572058 and parameters: {'x': 2.5094761656012157}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,100] Trial 48 finished with value: 15.399524503716533 and parameters: {'x': 3.7946705395483984}. Best is trial 35 with value: 1.0056068731049788.

[I 2021-06-28 23:09:19,104] Trial 49 finished with value: 1.000010000625206 and parameters: {'x': 0.003162376512363424}. Best is trial 49 with value: 1.000010000625206.
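Each line of the log above reports the sampled x, its value and the best trial so far. The loop behind it can be pictured with the toy sequential search below. It is NOT Optuna's TPE algorithm; it only illustrates the idea that later samples use earlier results. After a few random startup trials (loosely mirroring the random startup phase of Optuna's default sampler), half of the new samples are drawn near the best x found so far. All names and parameters here (`sequential_search`, `n_startup`, `sigma`) are hypothetical.

```python
import random


def f(x: float) -> float:
    """Objective from the tutorial: y = x^2 + 1."""
    return x ** 2 + 1


def sequential_search(n_trials: int, lo: float = -10.0, hi: float = 10.0,
                      n_startup: int = 10, sigma: float = 0.5, seed: int = 0):
    """Toy sequential search (NOT TPE): exploit the best x so far about half of the time."""
    rng = random.Random(seed)
    history = []  # (x, y) pairs, one per trial, like study.trials
    for i in range(n_trials):
        if i < n_startup or not history or rng.random() < 0.5:
            x = rng.uniform(lo, hi)  # explore: uniform sample over the whole range
        else:
            best_x, _ = min(history, key=lambda t: t[1])
            # exploit: sample near the incumbent, clipped to the search range
            x = min(hi, max(lo, rng.gauss(best_x, sigma)))
        history.append((x, f(x)))
    best_x, best_y = min(history, key=lambda t: t[1])
    return best_x, best_y, history


best_x, best_y, history = sequential_search(50)
print(best_x, best_y)
```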

To check the results we can do the following:

print('Optimal x:', study.best_params)

print('Optimal y:', study.best_value)

Optimal x: {'x': 0.003162376512363424}

Optimal y: 1.000010000625206

We can also get a pandas DataFrame with every trial that was run and analyze it however we like.

study.trials_dataframe()

| | number | value | datetime_start | datetime_complete | duration | params_x | state |

|---|---|---|---|---|---|---|---|

| 0 | 0 | 23.902017 | 2021-06-28 23:09:18.935332 | 2021-06-28 23:09:18.936417 | 0 days 00:00:00.001085 | -4.785605 | COMPLETE |

| 1 | 1 | 2.597699 | 2021-06-28 23:09:18.939868 | 2021-06-28 23:09:18.940357 | 0 days 00:00:00.000489 | -1.264001 | COMPLETE |

| 2 | 2 | 31.022406 | 2021-06-28 23:09:18.942424 | 2021-06-28 23:09:18.942869 | 0 days 00:00:00.000445 | -5.479271 | COMPLETE |

| 3 | 3 | 67.733348 | 2021-06-28 23:09:18.944934 | 2021-06-28 23:09:18.945429 | 0 days 00:00:00.000495 | 8.169048 | COMPLETE |

| 4 | 4 | 24.480579 | 2021-06-28 23:09:18.947517 | 2021-06-28 23:09:18.947971 | 0 days 00:00:00.000454 | 4.845676 | COMPLETE |

| 5 | 5 | 21.092604 | 2021-06-28 23:09:18.949708 | 2021-06-28 23:09:18.950099 | 0 days 00:00:00.000391 | 4.482477 | COMPLETE |

| 6 | 6 | 68.652428 | 2021-06-28 23:09:18.951713 | 2021-06-28 23:09:18.952076 | 0 days 00:00:00.000363 | -8.225110 | COMPLETE |

| 7 | 7 | 41.228355 | 2021-06-28 23:09:18.953625 | 2021-06-28 23:09:18.954000 | 0 days 00:00:00.000375 | -6.342583 | COMPLETE |

| 8 | 8 | 39.872191 | 2021-06-28 23:09:18.955054 | 2021-06-28 23:09:18.955310 | 0 days 00:00:00.000256 | 6.234757 | COMPLETE |

| 9 | 9 | 4.320443 | 2021-06-28 23:09:18.956372 | 2021-06-28 23:09:18.956664 | 0 days 00:00:00.000292 | -1.822208 | COMPLETE |

| 10 | 10 | 1.597980 | 2021-06-28 23:09:18.957751 | 2021-06-28 23:09:18.961695 | 0 days 00:00:00.003944 | 0.773292 | COMPLETE |

| 11 | 11 | 1.034532 | 2021-06-28 23:09:18.962870 | 2021-06-28 23:09:18.965450 | 0 days 00:00:00.002580 | 0.185827 | COMPLETE |

| 12 | 12 | 7.060820 | 2021-06-28 23:09:18.965972 | 2021-06-28 23:09:18.968642 | 0 days 00:00:00.002670 | 2.461873 | COMPLETE |

| 13 | 13 | 3.930018 | 2021-06-28 23:09:18.969124 | 2021-06-28 23:09:18.971723 | 0 days 00:00:00.002599 | 1.711729 | COMPLETE |

| 14 | 14 | 1.046888 | 2021-06-28 23:09:18.972269 | 2021-06-28 23:09:18.975136 | 0 days 00:00:00.002867 | 0.216537 | COMPLETE |

| 15 | 15 | 8.572677 | 2021-06-28 23:09:18.975670 | 2021-06-28 23:09:18.978126 | 0 days 00:00:00.002456 | -2.751850 | COMPLETE |

| 16 | 16 | 93.637197 | 2021-06-28 23:09:18.978572 | 2021-06-28 23:09:18.981806 | 0 days 00:00:00.003234 | 9.624822 | COMPLETE |

| 17 | 17 | 10.927203 | 2021-06-28 23:09:18.982243 | 2021-06-28 23:09:18.985387 | 0 days 00:00:00.003144 | -3.150746 | COMPLETE |

| 18 | 18 | 1.062849 | 2021-06-28 23:09:18.986136 | 2021-06-28 23:09:18.988484 | 0 days 00:00:00.002348 | 0.250696 | COMPLETE |

| 19 | 19 | 11.672926 | 2021-06-28 23:09:18.988975 | 2021-06-28 23:09:18.992569 | 0 days 00:00:00.003594 | 3.266944 | COMPLETE |

| 20 | 20 | 96.537037 | 2021-06-28 23:09:18.993133 | 2021-06-28 23:09:18.995906 | 0 days 00:00:00.002773 | -9.774305 | COMPLETE |

| 21 | 21 | 1.007356 | 2021-06-28 23:09:18.996279 | 2021-06-28 23:09:18.999083 | 0 days 00:00:00.002804 | 0.085767 | COMPLETE |

| 22 | 22 | 1.556905 | 2021-06-28 23:09:18.999442 | 2021-06-28 23:09:19.002391 | 0 days 00:00:00.002949 | -0.746261 | COMPLETE |

| 23 | 23 | 1.616213 | 2021-06-28 23:09:19.002844 | 2021-06-28 23:09:19.005757 | 0 days 00:00:00.002913 | 0.784992 | COMPLETE |

| 24 | 24 | 15.627954 | 2021-06-28 23:09:19.006150 | 2021-06-28 23:09:19.009011 | 0 days 00:00:00.002861 | -3.824651 | COMPLETE |

| 25 | 25 | 12.001754 | 2021-06-28 23:09:19.009392 | 2021-06-28 23:09:19.012153 | 0 days 00:00:00.002761 | 3.316889 | COMPLETE |

| 26 | 26 | 1.101095 | 2021-06-28 23:09:19.012580 | 2021-06-28 23:09:19.015344 | 0 days 00:00:00.002764 | -0.317954 | COMPLETE |

| 27 | 27 | 5.690981 | 2021-06-28 23:09:19.015695 | 2021-06-28 23:09:19.018904 | 0 days 00:00:00.003209 | -2.165867 | COMPLETE |

| 28 | 28 | 3.881764 | 2021-06-28 23:09:19.019647 | 2021-06-28 23:09:19.022072 | 0 days 00:00:00.002425 | 1.697576 | COMPLETE |

| 29 | 29 | 22.704288 | 2021-06-28 23:09:19.022680 | 2021-06-28 23:09:19.026501 | 0 days 00:00:00.003821 | -4.658786 | COMPLETE |

| 30 | 30 | 34.570708 | 2021-06-28 23:09:19.027023 | 2021-06-28 23:09:19.030706 | 0 days 00:00:00.003683 | 5.794023 | COMPLETE |

| 31 | 31 | 1.786439 | 2021-06-28 23:09:19.031141 | 2021-06-28 23:09:19.034242 | 0 days 00:00:00.003101 | 0.886814 | COMPLETE |

| 32 | 32 | 1.135155 | 2021-06-28 23:09:19.034923 | 2021-06-28 23:09:19.038423 | 0 days 00:00:00.003500 | -0.367635 | COMPLETE |

| 33 | 33 | 3.034147 | 2021-06-28 23:09:19.038979 | 2021-06-28 23:09:19.042155 | 0 days 00:00:00.003176 | -1.426235 | COMPLETE |

| 34 | 34 | 8.006908 | 2021-06-28 23:09:19.042866 | 2021-06-28 23:09:19.045919 | 0 days 00:00:00.003053 | 2.647056 | COMPLETE |

| 35 | 35 | 1.005607 | 2021-06-28 23:09:19.046355 | 2021-06-28 23:09:19.049460 | 0 days 00:00:00.003105 | 0.074879 | COMPLETE |

| 36 | 36 | 18.948201 | 2021-06-28 23:09:19.049848 | 2021-06-28 23:09:19.053248 | 0 days 00:00:00.003400 | 4.236532 | COMPLETE |

| 37 | 37 | 3.022052 | 2021-06-28 23:09:19.053992 | 2021-06-28 23:09:19.057380 | 0 days 00:00:00.003388 | -1.421989 | COMPLETE |

| 38 | 38 | 41.170416 | 2021-06-28 23:09:19.057840 | 2021-06-28 23:09:19.060986 | 0 days 00:00:00.003146 | -6.338014 | COMPLETE |

| 39 | 39 | 3.978614 | 2021-06-28 23:09:19.061569 | 2021-06-28 23:09:19.064579 | 0 days 00:00:00.003010 | 1.725866 | COMPLETE |

| 40 | 40 | 19.754433 | 2021-06-28 23:09:19.065140 | 2021-06-28 23:09:19.068616 | 0 days 00:00:00.003476 | -4.330639 | COMPLETE |

| 41 | 41 | 1.067268 | 2021-06-28 23:09:19.069304 | 2021-06-28 23:09:19.072759 | 0 days 00:00:00.003455 | 0.259360 | COMPLETE |

| 42 | 42 | 1.891917 | 2021-06-28 23:09:19.073384 | 2021-06-28 23:09:19.076815 | 0 days 00:00:00.003431 | -0.944414 | COMPLETE |

| 43 | 43 | 1.085338 | 2021-06-28 23:09:19.077278 | 2021-06-28 23:09:19.080316 | 0 days 00:00:00.003038 | 0.292127 | COMPLETE |

| 44 | 44 | 5.727400 | 2021-06-28 23:09:19.080888 | 2021-06-28 23:09:19.084064 | 0 days 00:00:00.003176 | -2.174259 | COMPLETE |

| 45 | 45 | 2.766383 | 2021-06-28 23:09:19.084748 | 2021-06-28 23:09:19.088206 | 0 days 00:00:00.003458 | 1.329053 | COMPLETE |

| 46 | 46 | 9.336070 | 2021-06-28 23:09:19.088738 | 2021-06-28 23:09:19.092169 | 0 days 00:00:00.003431 | -2.887225 | COMPLETE |

| 47 | 47 | 7.297471 | 2021-06-28 23:09:19.092772 | 2021-06-28 23:09:19.096148 | 0 days 00:00:00.003376 | 2.509476 | COMPLETE |

| 48 | 48 | 15.399525 | 2021-06-28 23:09:19.096937 | 2021-06-28 23:09:19.100724 | 0 days 00:00:00.003787 | 3.794671 | COMPLETE |

| 49 | 49 | 1.000010 | 2021-06-28 23:09:19.101393 | 2021-06-28 23:09:19.104337 | 0 days 00:00:00.002944 | 0.003162 | COMPLETE |

The results are as expected: x is close to 0 and y is close to 1.
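As a quick sanity check, we can recompute the objective at the best x reported by the study and measure how far it is from the analytic optimum (0, 1) derived at the start. The numbers below are copied from the output above.

```python
# Best trial reported by Optuna above (trial 49).
best_x = 0.003162376512363424
reported_y = 1.000010000625206


def f(x: float) -> float:
    """Objective from the tutorial: y = x^2 + 1."""
    return x ** 2 + 1


recomputed_y = f(best_x)
x_gap = abs(best_x - 0.0)   # distance to the analytic minimiser x* = 0
y_gap = recomputed_y - 1.0  # distance to the analytic minimum y* = 1
print(recomputed_y, x_gap, y_gap)
```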

Saving the results to wandb

To store the results in Weights & Biases we call wandb.init() with a project name. We can also add some tags to identify the run.

A run is roughly equivalent to a model for which different hyperparameters are tried. Each trial is a different combination of hyperparameters and is stored with run.log(). Here each trial is logged at a step equal to its index, together with trial.params (all the parameters being optimized, in our case x) and trial.value (the resulting objective value).

Finally, run.summary can store anything we like. I like to store the best y and the optimal parameters.
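Logging the parameters and the objective value together gives one row per trial in the run history. The sketch below builds those per-step dicts without touching wandb or Optuna, using a hypothetical `TrialRecord` stand-in instead of real trial objects; in the real loop each payload would be passed to `run.log(payload, step=step)`. The two sample trials are copied from the study log above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class TrialRecord:
    """Hypothetical stand-in for a finished Optuna trial (not the real class)."""
    number: int
    params: Dict[str, float] = field(default_factory=dict)
    value: Optional[float] = None


def build_log_payloads(trials: List[TrialRecord]) -> List[Tuple[int, Dict[str, Optional[float]]]]:
    """One (step, payload) pair per trial: the sampled params merged with the objective 'y'."""
    return [(step, {**trial.params, "y": trial.value})
            for step, trial in enumerate(trials)]


trials = [
    TrialRecord(0, {"x": -4.785605237671724}, 23.902017490831042),
    TrialRecord(1, {"x": -1.2640010678076337}, 2.597698699418838),
]
for step, payload in build_log_payloads(trials):
    print(step, payload)
```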

with wandb.init(project="nuevo-proyecto",
                tags=['optimización', 'cuadrática']) as run:
    # one history row per Optuna trial: the sampled params plus the objective value
    for step, trial in enumerate(study.trials):
        run.log({**trial.params, "y": trial.value}, step=step)
    # store the best result in the run summary
    run.summary['best_y'] = study.best_value
    run.summary['best_params'] = study.best_params

Tracking run with wandb version 0.10.32

Syncing run dark-sky-3 to Weights & Biases (Documentation).

Project page: https://wandb.ai/datacuber/nuevo-proyecto

Run page: https://wandb.ai/datacuber/nuevo-proyecto/runs/29ttsi72

Run data is saved locally in /home/alfonso/Documents/kaggle/titanic/wandb/run-20210628_230927-29ttsi72

Waiting for W&B process to finish, PID 442316

Program ended successfully.


Find user logs for this run at: /home/alfonso/Documents/kaggle/titanic/wandb/run-20210628_230927-29ttsi72/logs/debug.log

Find internal logs for this run at: /home/alfonso/Documents/kaggle/titanic/wandb/run-20210628_230927-29ttsi72/logs/debug-internal.log


Synced 5 W&B file(s), 0 media file(s), 0 artifact file(s) and 0 other file(s)

Synced dark-sky-3: https://wandb.ai/datacuber/nuevo-proyecto/runs/29ttsi72

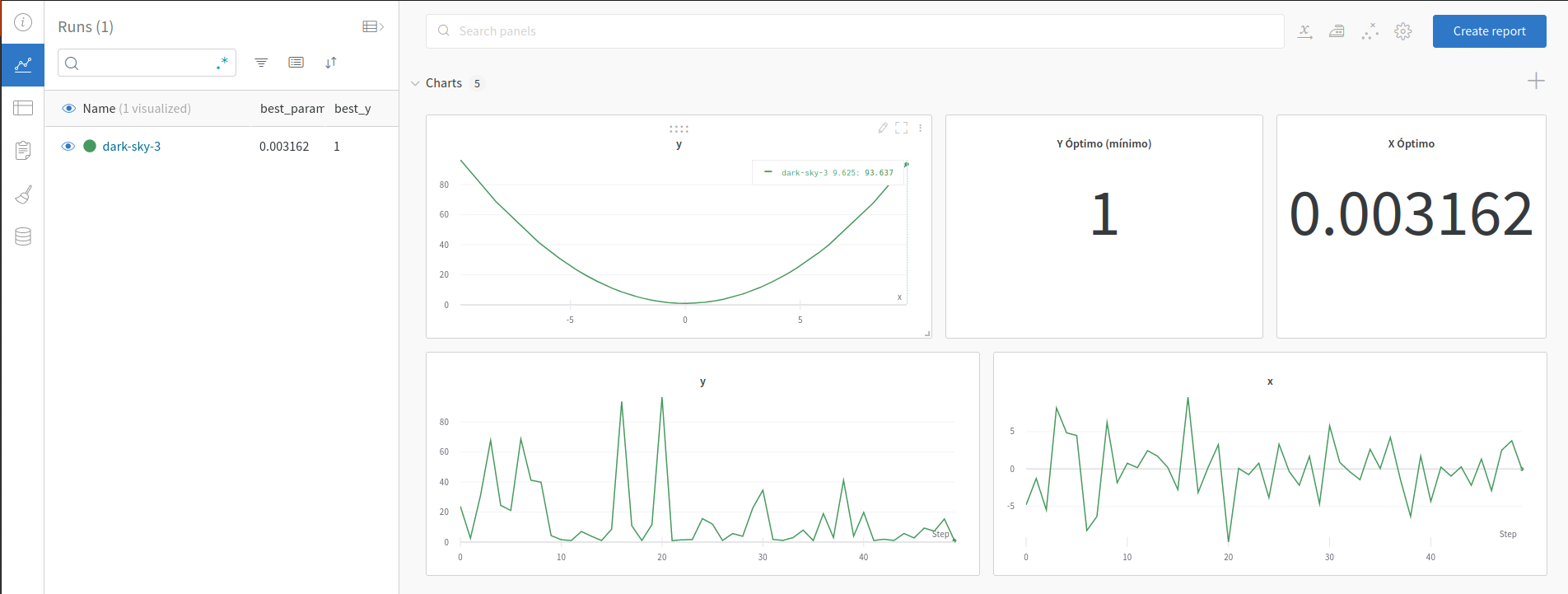

The results can be viewed in the wandb portal through the link provided. In the portal you can add any charts you need; the goal is to visualize the project as clearly as possible (see the image above).

This quick tutorial shows how to get started with Weights & Biases very quickly. I hope to write another one showing more of the tool's benefits, focused entirely on its use with Machine Learning models.

api = wandb.Api()

# run is specified by <entity>/<project>/<run id>

run = api.run("datacuber/optuna/2k1vy0cj")

metrics_df = run.history()

metrics_df

| | _step | x | _runtime | mse | z | _timestamp |

|---|---|---|---|---|---|---|

| 0 | 0 | -6.377177 | 2 | 85.680586 | 8.816779 | 1613775185 |

| 1 | 1 | -0.069629 | 2 | 169.460192 | 7.543658 | 1613775185 |

| 2 | 2 | -4.119354 | 2 | 315.556214 | -5.822274 | 1613775185 |

| 3 | 3 | -9.321491 | 2 | 14.458745 | 3.759513 | 1613775185 |

| 4 | 4 | -4.059086 | 2 | 10.451453 | 1.413108 | 1613775185 |

| ... | ... | ... | ... | ... | ... | ... |

| 95 | 95 | 4.880971 | 2 | 0.139162 | -1.627007 | 1613775185 |

| 96 | 96 | 4.535738 | 2 | 13.287564 | -3.090475 | 1613775185 |

| 97 | 97 | 5.190690 | 2 | 3.631573 | -2.548179 | 1613775185 |

| 98 | 98 | 5.976324 | 2 | 10.780678 | -3.629859 | 1613775185 |

| 99 | 99 | 4.888123 | 2 | 0.010747 | -1.495896 | 1613775185 |

100 rows × 6 columns

system_metrics = run.history(stream = 'events')

system_metrics

| | system.network.sent | system.network.recv | system.disk | _wandb | system.gpu.0.temp | system.gpu.0.memory | system.gpu.0.gpu | _runtime | system.proc.memory.rssMB | system.proc.memory.availableMB | system.cpu | system.proc.cpu.threads | system.memory | system.proc.memory.percent | system.gpu.0.memoryAllocated | _timestamp |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 27276 | 36513 | 28.5 | True | 46 | 1 | 1 | 3 | 301.94 | 28374.51 | 9.05 | 23.5 | 11.4 | 0.94 | 4.27 | 1613775186 |

run.summary

{'z': -1.4958962538521758, 'mse': 0.010747402305021076, 'best': {'x': 4.888122828892169, 'z': -1.4958962538521758}, '_step': 99, '_runtime': 2, '_timestamp': 1613775185, 'x': 4.888122828892169}