How do you implement a simple image classifier?

Classifying Images

Neural networks are often seen today as the pinnacle of complexity in Machine Learning. It is true that new research showing what these systems can do comes out every day, but what is less well known is that our own computers are powerful enough to solve some of these problems.

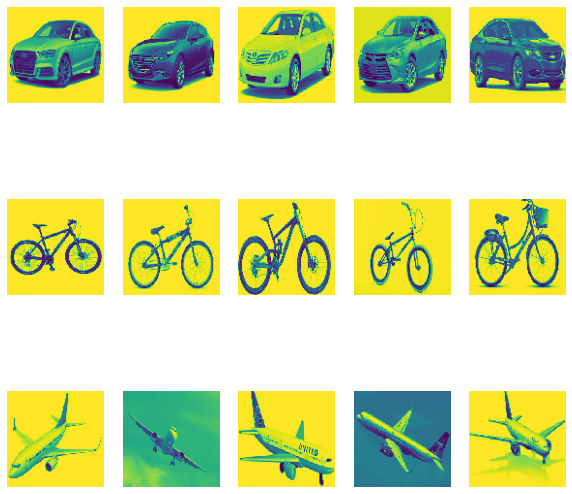

The idea of this tutorial is to implement a simple convolutional network in Keras that classifies images, in this case images of bicycles, cars and planes. The following images will be used, which can be downloaded from here:

Images rarely all share the same characteristics. Here they differ in size and color, and the objects face in different directions. That is why the preprocessing has to rescale every image to a common size, large enough to keep the detail needed for classification but small enough to fit in memory.

from tensorflow.keras.preprocessing.image import load_img, img_to_array, array_to_img

from tensorflow.image import resize

import glob

import numpy as np

import matplotlib.pyplot as plt

np.random.seed(1)

Training Data

c_lista = glob.glob('fotos/c[1-5].jpg')

b_lista = glob.glob('fotos/b[1-5].jpg')

p_lista = glob.glob('fotos/p[1-5].jpg')

lista_train = c_lista + b_lista + p_lista

In this case 15 images are used for training, that is, images 1 to 5 of each class.
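To make the `[1-5]` pattern concrete, here is a minimal sketch using `fnmatch` from the standard library, which applies the same shell-style matching rules as `glob`. The file names are hypothetical and only illustrate which names the pattern selects.

```python
from fnmatch import fnmatch

# Hypothetical file names, used only to illustrate the pattern.
names = ["c1.jpg", "c5.jpg", "c6.jpg", "b3.jpg", "c10.jpg"]

# [1-5] matches exactly one character in the range 1..5,
# so c6.jpg (held out for testing) and c10.jpg are excluded.
train_cars = [n for n in names if fnmatch(n, "c[1-5].jpg")]
print(train_cars)
```

Note that `glob.glob` does not guarantee any particular order within each list. That does not affect the labels here, because the three lists are concatenated class by class.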

Once the training set is defined, the next step is preprocessing. Because shape is the main feature that distinguishes the classes, only grayscale images are used, rescaled to 100 $\times$ 100 pixels.

This is done with utility functions from TensorFlow:

def import_pic(path, size = (100,100)):

    # Load as grayscale and resize to `size`; the result has shape (height, width, 1)

    return img_to_array(load_img(path, color_mode='grayscale', target_size = size))

fotos = np.array([import_pic(p) for p in lista_train])

The imported images look like this:

plt.figure(figsize=(10, 10))

for i in range(15):

    ax = plt.subplot(3, 5, i + 1)

    plt.imshow(fotos[i])

    #plt.title(int(labels[i]))

    plt.axis("off")

Next, the target vector is defined. This is a multiclass problem with one-hot encoded targets: class 1 corresponds to cars, class 2 to bicycles and class 3 to planes.

y_train = np.repeat([[1,0,0],[0,1,0],[0,0,1]], [5,5,5], axis = 0)
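As an illustration of what this array contains, the same one-hot targets can be built from integer class ids. This is only a sketch: the ids 0, 1, 2 (car, bicycle, plane) and the names `class_ids` and `y_alt` are assumptions introduced here, not part of the original code.

```python
import numpy as np

# Assumed integer ids: 0 = car, 1 = bicycle, 2 = plane, five images each.
class_ids = np.repeat([0, 1, 2], 5)

# Row i of the 3x3 identity matrix is the one-hot vector for class i.
y_alt = np.eye(3, dtype=int)[class_ids]

# The construction used in the tutorial, for comparison.
y_train = np.repeat([[1, 0, 0], [0, 1, 0], [0, 0, 1]], [5, 5, 5], axis=0)

print(y_alt.shape)
print(np.array_equal(y_alt, y_train))
```

Both versions give a 15 x 3 array whose row order matches `lista_train`: five cars, then five bicycles, then five planes.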

Test Data

It is important that every processing step applied to the training data is replicated in exactly the same way for the test data.

lista_test = glob.glob('fotos/c6.jpg')+glob.glob('fotos/b6.jpg')+glob.glob('fotos/p6.jpg')

fotos_test = np.array([import_pic(p) for p in lista_test])

y_test = np.array([[1,0,0],[0,1,0],[0,0,1]])

plt.figure(figsize=(10, 10))

for i in range(3):

    ax = plt.subplot(3, 1, i + 1)

    plt.imshow(fotos_test[i])

    #plt.title(int(labels[i]))

    plt.axis("off")

Model Implementation in TensorFlow

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Conv2D, MaxPooling2D, Flatten, Dropout

In this case a convolutional network similar in style to the VGG-16 architecture will be built.

model = Sequential(name = 'Convolutional_Network')

model.add(Conv2D(name = 'Conv_1', filters = 32, kernel_size=(3,3), activation='relu', input_shape = (100,100,1)))

model.add(Conv2D(name = 'Conv_2', filters=32, kernel_size=(3,3), activation='relu'))

model.add(MaxPooling2D(name = 'Pool1', pool_size = (2,2)))

model.add(Dropout(name = 'Drop_1', rate = 0.25))

model.add(Conv2D(name = 'Conv_3', filters=64, kernel_size=(3,3), activation='relu'))

model.add(Conv2D(name = 'Conv_4', filters = 64, kernel_size = (3,3), activation = 'relu'))

model.add(MaxPooling2D(name = 'Pool2', pool_size = (2,2)))

model.add(Dropout(name = 'Drop_2', rate = 0.25))

model.add(Flatten(name = 'Flat'))

model.add(Dense(name = 'Dense_1', units = 256, activation='relu'))

model.add(Dropout(name = 'Drop_3', rate = 0.25))

model.add(Dense(name = 'Output', units = 3, activation='softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = 'sgd', metrics = ['accuracy'])

model.summary()

Model: "Convolutional_Network"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

Conv_1 (Conv2D) (None, 98, 98, 32) 320

_________________________________________________________________

Conv_2 (Conv2D) (None, 96, 96, 32) 9248

_________________________________________________________________

Pool1 (MaxPooling2D) (None, 48, 48, 32) 0

_________________________________________________________________

Drop_1 (Dropout) (None, 48, 48, 32) 0

_________________________________________________________________

Conv_3 (Conv2D) (None, 46, 46, 64) 18496

_________________________________________________________________

Conv_4 (Conv2D) (None, 44, 44, 64) 36928

_________________________________________________________________

Pool2 (MaxPooling2D) (None, 22, 22, 64) 0

_________________________________________________________________

Drop_2 (Dropout) (None, 22, 22, 64) 0

_________________________________________________________________

Flat (Flatten) (None, 30976) 0

_________________________________________________________________

Dense_1 (Dense) (None, 256) 7930112

_________________________________________________________________

Drop_3 (Dropout) (None, 256) 0

_________________________________________________________________

Output (Dense) (None, 3) 771

=================================================================

Total params: 7,995,875

Trainable params: 7,995,875

Non-trainable params: 0

_________________________________________________________________

The network has two convolutional sections, with 32 and 64 filters respectively. Each section consists of two convolutional layers, a pooling layer and a dropout layer. A Flatten transformation then flattens the output so it can be passed to a dense layer, which performs the classification of each image. In total the network has close to 8 million parameters to learn.
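The shapes and parameter counts in the summary can be checked by hand. The sketch below is plain Python, with hypothetical helper names `conv`, `pool` and `dense`, and assumes 3 x 3 convolutions without padding and 2 x 2 pooling, as in the model definition.

```python
def conv(shape, filters, k=3):
    """Unpadded k x k convolution: returns (output shape, parameter count)."""
    h, w, c = shape
    params = (k * k * c + 1) * filters  # kernel weights plus one bias per filter
    return (h - k + 1, w - k + 1, filters), params


def pool(shape, p=2):
    """p x p max pooling with stride p: halves height and width, no parameters."""
    h, w, c = shape
    return (h // p, w // p, c), 0


def dense(n_in, units):
    """Fully connected layer: returns (output size, parameter count)."""
    return units, (n_in + 1) * units


shape, total = (100, 100, 1), 0
layers = [
    lambda s: conv(s, 32), lambda s: conv(s, 32), pool,  # first section
    lambda s: conv(s, 64), lambda s: conv(s, 64), pool,  # second section
]
for layer in layers:
    shape, params = layer(shape)
    total += params

flat = shape[0] * shape[1] * shape[2]   # size after Flatten
n, params = dense(flat, 256)
total += params
n, params = dense(n, 3)
total += params

print(shape, flat, total)
```

Dropout and Flatten add no parameters, so they are left out. Almost all of the roughly 8 million parameters sit in the first dense layer, which connects the 30,976 flattened values to 256 units.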

It is worth stressing that, because this is a multiclass problem, the loss function used is categorical crossentropy.
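For intuition about this loss, here is a minimal sketch of categorical crossentropy for a single sample with a one-hot target. The function below is a hand-written illustration, not the Keras implementation, which also clips the predictions for numerical stability.

```python
import math


def categorical_crossentropy(y_true, y_pred):
    """Cross-entropy for one sample: -sum(t * log(p)) over the classes."""
    return -sum(t * math.log(p) for t, p in zip(y_true, y_pred) if t > 0)


# An untrained network that guesses 1/3 for each of the three classes.
uniform = categorical_crossentropy([1, 0, 0], [1 / 3, 1 / 3, 1 / 3])

# A network that assigns 0.9 to the correct class.
confident = categorical_crossentropy([1, 0, 0], [0.9, 0.05, 0.05])

print(round(uniform, 4), round(confident, 4))
```

A uniform guess over three classes gives a loss of ln 3, about 1.10, which is close to the loss reported in the first training epochs.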

Finally the model is trained for 200 epochs with shuffling, after scaling each pixel value by dividing it by 255.
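As a rough illustration of the scaling step, the sketch below uses random stand-in data with the same shape as `fotos` (the real images are not needed for this). The name `fake_fotos` is hypothetical, and the batch size of 32 is the Keras default for `fit`.

```python
import math

import numpy as np

# Stand-in for the 15 grayscale training images, with values in 0..255.
rng = np.random.default_rng(1)
fake_fotos = rng.integers(0, 256, size=(15, 100, 100, 1)).astype("float32")

# Dividing by 255 maps every pixel into the range [0, 1].
scaled = fake_fotos / 255

# With 15 samples and the default batch size of 32, each epoch is one batch.
steps_per_epoch = math.ceil(len(scaled) / 32)

print(scaled.min() >= 0.0, scaled.max() <= 1.0, steps_per_epoch)
```

Since all 15 images fit in a single batch, shuffling only changes their order inside that batch. This is consistent with the `1/1` step counter in the training log.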

%%time

model.fit(fotos/255,y_train, epochs = 200, shuffle = True)

Epoch 1/200

1/1 [==============================] - 0s 2ms/step - loss: 1.1311 - accuracy: 0.2000

Epoch 2/200

1/1 [==============================] - 0s 5ms/step - loss: 1.1029 - accuracy: 0.2667

Epoch 3/200

1/1 [==============================] - 0s 5ms/step - loss: 1.0923 - accuracy: 0.3333

Epoch 4/200

1/1 [==============================] - 0s 5ms/step - loss: 1.0671 - accuracy: 0.5333

Epoch 5/200

1/1 [==============================] - 0s 6ms/step - loss: 1.0561 - accuracy: 0.4000

Epoch 6/200

1/1 [==============================] - 0s 7ms/step - loss: 1.0438 - accuracy: 0.4667

Epoch 7/200

1/1 [==============================] - 0s 6ms/step - loss: 1.1003 - accuracy: 0.2667

Epoch 8/200

1/1 [==============================] - 0s 6ms/step - loss: 1.0388 - accuracy: 0.5333

Epoch 9/200

1/1 [==============================] - 0s 6ms/step - loss: 1.0468 - accuracy: 0.3333

Epoch 10/200

1/1 [==============================] - 0s 6ms/step - loss: 1.0816 - accuracy: 0.3333

Epoch 11/200

1/1 [==============================] - 0s 6ms/step - loss: 1.0738 - accuracy: 0.4000

Epoch 12/200

1/1 [==============================] - 0s 5ms/step - loss: 1.0323 - accuracy: 0.4667

Epoch 13/200

1/1 [==============================] - 0s 5ms/step - loss: 1.0177 - accuracy: 0.4667

Epoch 14/200

1/1 [==============================] - 0s 5ms/step - loss: 0.9835 - accuracy: 0.5333

Epoch 15/200

1/1 [==============================] - 0s 5ms/step - loss: 1.0549 - accuracy: 0.4000

Epoch 16/200

1/1 [==============================] - 0s 5ms/step - loss: 1.0462 - accuracy: 0.4667

Epoch 17/200

1/1 [==============================] - 0s 6ms/step - loss: 0.9802 - accuracy: 0.8000

Epoch 18/200

1/1 [==============================] - 0s 5ms/step - loss: 0.9999 - accuracy: 0.4667

Epoch 19/200

1/1 [==============================] - 0s 5ms/step - loss: 0.9641 - accuracy: 0.5333

Epoch 20/200

1/1 [==============================] - 0s 5ms/step - loss: 0.9304 - accuracy: 0.6000

Epoch 21/200

1/1 [==============================] - 0s 5ms/step - loss: 0.9563 - accuracy: 0.5333

Epoch 22/200

1/1 [==============================] - 0s 5ms/step - loss: 0.8624 - accuracy: 0.6667

Epoch 23/200

1/1 [==============================] - 0s 5ms/step - loss: 0.9080 - accuracy: 0.6000

Epoch 24/200

1/1 [==============================] - 0s 5ms/step - loss: 0.8809 - accuracy: 0.5333

Epoch 25/200

1/1 [==============================] - 0s 6ms/step - loss: 0.8548 - accuracy: 0.6667

Epoch 26/200

1/1 [==============================] - 0s 7ms/step - loss: 0.8035 - accuracy: 0.6667

Epoch 27/200

1/1 [==============================] - 0s 5ms/step - loss: 0.7307 - accuracy: 0.8000

Epoch 28/200

1/1 [==============================] - 0s 6ms/step - loss: 0.7353 - accuracy: 0.7333

Epoch 29/200

1/1 [==============================] - 0s 6ms/step - loss: 0.7121 - accuracy: 0.6667

Epoch 30/200

1/1 [==============================] - 0s 5ms/step - loss: 0.7371 - accuracy: 0.7333

Epoch 31/200

1/1 [==============================] - 0s 6ms/step - loss: 0.6615 - accuracy: 0.6667

Epoch 32/200

1/1 [==============================] - 0s 6ms/step - loss: 0.6892 - accuracy: 0.6667

Epoch 33/200

1/1 [==============================] - 0s 6ms/step - loss: 0.9558 - accuracy: 0.6000

Epoch 34/200

1/1 [==============================] - 0s 6ms/step - loss: 1.1499 - accuracy: 0.6000

Epoch 35/200

1/1 [==============================] - 0s 5ms/step - loss: 0.8030 - accuracy: 0.5333

Epoch 36/200

1/1 [==============================] - 0s 6ms/step - loss: 0.6960 - accuracy: 0.6667

Epoch 37/200

1/1 [==============================] - 0s 5ms/step - loss: 0.6028 - accuracy: 0.8667

Epoch 38/200

1/1 [==============================] - 0s 5ms/step - loss: 0.6218 - accuracy: 0.6667

Epoch 39/200

1/1 [==============================] - 0s 6ms/step - loss: 0.5688 - accuracy: 0.8667

Epoch 40/200

1/1 [==============================] - 0s 6ms/step - loss: 0.5789 - accuracy: 0.6667

Epoch 41/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4852 - accuracy: 0.8000

Epoch 42/200

1/1 [==============================] - 0s 6ms/step - loss: 0.6028 - accuracy: 0.6000

Epoch 43/200

1/1 [==============================] - 0s 6ms/step - loss: 0.8221 - accuracy: 0.6000

Epoch 44/200

1/1 [==============================] - 0s 5ms/step - loss: 1.0544 - accuracy: 0.6000

Epoch 45/200

1/1 [==============================] - 0s 5ms/step - loss: 0.7402 - accuracy: 0.6667

Epoch 46/200

1/1 [==============================] - 0s 5ms/step - loss: 0.6138 - accuracy: 0.6667

Epoch 47/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4751 - accuracy: 0.8000

Epoch 48/200

1/1 [==============================] - 0s 5ms/step - loss: 0.5481 - accuracy: 0.8000

Epoch 49/200

1/1 [==============================] - 0s 5ms/step - loss: 0.4765 - accuracy: 0.7333

Epoch 50/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4316 - accuracy: 0.8667

Epoch 51/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3946 - accuracy: 0.8000

Epoch 52/200

1/1 [==============================] - 0s 6ms/step - loss: 0.5281 - accuracy: 0.6667

Epoch 53/200

1/1 [==============================] - 0s 5ms/step - loss: 0.6698 - accuracy: 0.6667

Epoch 54/200

1/1 [==============================] - 0s 5ms/step - loss: 0.8859 - accuracy: 0.6000

Epoch 55/200

1/1 [==============================] - 0s 6ms/step - loss: 0.5392 - accuracy: 0.6667

Epoch 56/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4646 - accuracy: 0.8667

Epoch 57/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4750 - accuracy: 0.8667

Epoch 58/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4122 - accuracy: 0.9333

Epoch 59/200

1/1 [==============================] - 0s 5ms/step - loss: 0.3788 - accuracy: 0.9333

Epoch 60/200

1/1 [==============================] - 0s 5ms/step - loss: 0.4162 - accuracy: 0.8000

Epoch 61/200

1/1 [==============================] - 0s 5ms/step - loss: 0.4748 - accuracy: 0.8000

Epoch 62/200

1/1 [==============================] - 0s 6ms/step - loss: 0.5568 - accuracy: 0.6667

Epoch 63/200

1/1 [==============================] - 0s 5ms/step - loss: 0.4309 - accuracy: 0.8000

Epoch 64/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4492 - accuracy: 0.7333

Epoch 65/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4759 - accuracy: 0.6667

Epoch 66/200

1/1 [==============================] - 0s 5ms/step - loss: 0.5108 - accuracy: 0.6667

Epoch 67/200

1/1 [==============================] - 0s 6ms/step - loss: 0.5261 - accuracy: 0.6667

Epoch 68/200

1/1 [==============================] - 0s 5ms/step - loss: 0.5230 - accuracy: 0.7333

Epoch 69/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4543 - accuracy: 0.8000

Epoch 70/200

1/1 [==============================] - 0s 5ms/step - loss: 0.3860 - accuracy: 0.7333

Epoch 71/200

1/1 [==============================] - 0s 5ms/step - loss: 0.3412 - accuracy: 0.8000

Epoch 72/200

1/1 [==============================] - 0s 5ms/step - loss: 0.3883 - accuracy: 0.8000

Epoch 73/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4140 - accuracy: 0.8000

Epoch 74/200

1/1 [==============================] - 0s 5ms/step - loss: 0.6120 - accuracy: 0.7333

Epoch 75/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4134 - accuracy: 0.8000

Epoch 76/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3200 - accuracy: 0.9333

Epoch 77/200

1/1 [==============================] - 0s 5ms/step - loss: 0.3044 - accuracy: 0.9333

Epoch 78/200

1/1 [==============================] - 0s 5ms/step - loss: 0.2896 - accuracy: 0.8667

Epoch 79/200

1/1 [==============================] - 0s 5ms/step - loss: 0.2509 - accuracy: 1.0000

Epoch 80/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3629 - accuracy: 0.8000

Epoch 81/200

1/1 [==============================] - 0s 5ms/step - loss: 0.4773 - accuracy: 0.8000

Epoch 82/200

1/1 [==============================] - 0s 5ms/step - loss: 0.6619 - accuracy: 0.7333

Epoch 83/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4416 - accuracy: 0.7333

Epoch 84/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3173 - accuracy: 0.8667

Epoch 85/200

1/1 [==============================] - 0s 5ms/step - loss: 0.2936 - accuracy: 0.8667

Epoch 86/200

1/1 [==============================] - 0s 6ms/step - loss: 0.2473 - accuracy: 1.0000

Epoch 87/200

1/1 [==============================] - 0s 5ms/step - loss: 0.2481 - accuracy: 1.0000

Epoch 88/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3151 - accuracy: 0.8000

Epoch 89/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3888 - accuracy: 0.8000

Epoch 90/200

1/1 [==============================] - 0s 5ms/step - loss: 0.4922 - accuracy: 0.7333

Epoch 91/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4382 - accuracy: 0.7333

Epoch 92/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3617 - accuracy: 0.7333

Epoch 93/200

1/1 [==============================] - 0s 5ms/step - loss: 0.2617 - accuracy: 0.9333

Epoch 94/200

1/1 [==============================] - 0s 6ms/step - loss: 0.2286 - accuracy: 0.9333

Epoch 95/200

1/1 [==============================] - 0s 6ms/step - loss: 0.2101 - accuracy: 1.0000

Epoch 96/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1811 - accuracy: 0.9333

Epoch 97/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1959 - accuracy: 1.0000

Epoch 98/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1525 - accuracy: 1.0000

Epoch 99/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1802 - accuracy: 0.9333

Epoch 100/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1608 - accuracy: 1.0000

Epoch 101/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1246 - accuracy: 1.0000

Epoch 102/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1201 - accuracy: 1.0000

Epoch 103/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0910 - accuracy: 1.0000

Epoch 104/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0957 - accuracy: 0.9333

Epoch 105/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1012 - accuracy: 1.0000

Epoch 106/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3639 - accuracy: 0.8000

Epoch 107/200

1/1 [==============================] - 0s 6ms/step - loss: 1.1047 - accuracy: 0.7333

Epoch 108/200

1/1 [==============================] - 0s 6ms/step - loss: 0.5726 - accuracy: 0.6667

Epoch 109/200

1/1 [==============================] - 0s 5ms/step - loss: 0.3250 - accuracy: 0.8000

Epoch 110/200

1/1 [==============================] - 0s 6ms/step - loss: 0.2097 - accuracy: 1.0000

Epoch 111/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1716 - accuracy: 1.0000

Epoch 112/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1640 - accuracy: 1.0000

Epoch 113/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1720 - accuracy: 1.0000

Epoch 114/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0978 - accuracy: 1.0000

Epoch 115/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1654 - accuracy: 0.9333

Epoch 116/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1490 - accuracy: 0.9333

Epoch 117/200

1/1 [==============================] - 0s 6ms/step - loss: 0.2189 - accuracy: 0.8667

Epoch 118/200

1/1 [==============================] - 0s 5ms/step - loss: 0.4318 - accuracy: 0.7333

Epoch 119/200

1/1 [==============================] - 0s 6ms/step - loss: 0.5190 - accuracy: 0.7333

Epoch 120/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1658 - accuracy: 1.0000

Epoch 121/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1210 - accuracy: 1.0000

Epoch 122/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1245 - accuracy: 1.0000

Epoch 123/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1225 - accuracy: 1.0000

Epoch 124/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1460 - accuracy: 1.0000

Epoch 125/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1592 - accuracy: 0.9333

Epoch 126/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0912 - accuracy: 1.0000

Epoch 127/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0838 - accuracy: 1.0000

Epoch 128/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0996 - accuracy: 1.0000

Epoch 129/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0948 - accuracy: 1.0000

Epoch 130/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0813 - accuracy: 1.0000

Epoch 131/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1437 - accuracy: 0.9333

Epoch 132/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0994 - accuracy: 1.0000

Epoch 133/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1402 - accuracy: 0.9333

Epoch 134/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1606 - accuracy: 0.8667

Epoch 135/200

1/1 [==============================] - 0s 5ms/step - loss: 0.2365 - accuracy: 0.9333

Epoch 136/200

1/1 [==============================] - 0s 6ms/step - loss: 0.2089 - accuracy: 0.8667

Epoch 137/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1223 - accuracy: 0.9333

Epoch 138/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0547 - accuracy: 1.0000

Epoch 139/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0975 - accuracy: 0.9333

Epoch 140/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1617 - accuracy: 0.9333

Epoch 141/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0851 - accuracy: 1.0000

Epoch 142/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0541 - accuracy: 1.0000

Epoch 143/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0496 - accuracy: 1.0000

Epoch 144/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0681 - accuracy: 1.0000

Epoch 145/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0416 - accuracy: 1.0000

Epoch 146/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0324 - accuracy: 1.0000

Epoch 147/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0288 - accuracy: 1.0000

Epoch 148/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0322 - accuracy: 1.0000

Epoch 149/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0443 - accuracy: 1.0000

Epoch 150/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0460 - accuracy: 1.0000

Epoch 151/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0512 - accuracy: 1.0000

Epoch 152/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0512 - accuracy: 1.0000

Epoch 153/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0796 - accuracy: 1.0000

Epoch 154/200

1/1 [==============================] - 0s 5ms/step - loss: 0.1010 - accuracy: 0.9333

Epoch 155/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0719 - accuracy: 1.0000

Epoch 156/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0385 - accuracy: 1.0000

Epoch 157/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0267 - accuracy: 1.0000

Epoch 158/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0248 - accuracy: 1.0000

Epoch 159/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0322 - accuracy: 1.0000

Epoch 160/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0256 - accuracy: 1.0000

Epoch 161/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0241 - accuracy: 1.0000

Epoch 162/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0313 - accuracy: 1.0000

Epoch 163/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0368 - accuracy: 1.0000

Epoch 164/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0247 - accuracy: 1.0000

Epoch 165/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0239 - accuracy: 1.0000

Epoch 166/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0214 - accuracy: 1.0000

Epoch 167/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0275 - accuracy: 1.0000

Epoch 168/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0116 - accuracy: 1.0000

Epoch 169/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0380 - accuracy: 1.0000

Epoch 170/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0183 - accuracy: 1.0000

Epoch 171/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0164 - accuracy: 1.0000

Epoch 172/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0217 - accuracy: 1.0000

Epoch 173/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0081 - accuracy: 1.0000

Epoch 174/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0366 - accuracy: 1.0000

Epoch 175/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0361 - accuracy: 1.0000

Epoch 176/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0168 - accuracy: 1.0000

Epoch 177/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0282 - accuracy: 1.0000

Epoch 178/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0175 - accuracy: 1.0000

Epoch 179/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0064 - accuracy: 1.0000

Epoch 180/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0117 - accuracy: 1.0000

Epoch 181/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0202 - accuracy: 1.0000

Epoch 182/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0252 - accuracy: 1.0000

Epoch 183/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0097 - accuracy: 1.0000

Epoch 184/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0596 - accuracy: 0.9333

Epoch 185/200

1/1 [==============================] - 0s 5ms/step - loss: 0.2113 - accuracy: 0.9333

Epoch 186/200

1/1 [==============================] - 0s 6ms/step - loss: 1.6986 - accuracy: 0.6667

Epoch 187/200

1/1 [==============================] - 0s 6ms/step - loss: 1.2900 - accuracy: 0.6667

Epoch 188/200

1/1 [==============================] - 0s 5ms/step - loss: 0.7224 - accuracy: 0.6667

Epoch 189/200

1/1 [==============================] - 0s 6ms/step - loss: 0.4613 - accuracy: 0.7333

Epoch 190/200

1/1 [==============================] - 0s 6ms/step - loss: 0.3255 - accuracy: 0.8000

Epoch 191/200

1/1 [==============================] - 0s 6ms/step - loss: 0.2301 - accuracy: 0.9333

Epoch 192/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1540 - accuracy: 1.0000

Epoch 193/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1042 - accuracy: 1.0000

Epoch 194/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0850 - accuracy: 1.0000

Epoch 195/200

1/1 [==============================] - 0s 6ms/step - loss: 0.1039 - accuracy: 1.0000

Epoch 196/200

1/1 [==============================] - 0s 5ms/step - loss: 0.0898 - accuracy: 1.0000

Epoch 197/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0558 - accuracy: 1.0000

Epoch 198/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0598 - accuracy: 1.0000

Epoch 199/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0588 - accuracy: 1.0000

Epoch 200/200

1/1 [==============================] - 0s 6ms/step - loss: 0.0430 - accuracy: 1.0000

CPU times: user 3.21 s, sys: 414 ms, total: 3.63 s

Wall time: 3.51 s

In my case this took about 4 seconds, since I have an RTX 2070 that parallelizes this very well. Running it on a CPU could take a little longer.

Inference

After training, the results are predicted using the set of test images:

model.predict(fotos_test)

array([[1., 0., 0.],

[0., 1., 0.],

[0., 0., 1.]], dtype=float32)

The network quickly learned the task and correctly predicted all the given test cases: first a car, second a bicycle and third a plane.

I hope you enjoyed it.
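To read such an output programmatically, each row of probabilities can be turned into a class name with `argmax`. The sketch below copies the probabilities from the output shown above. The `class_names` list is an assumption based on the column order of `y_train`.

```python
import numpy as np

# Assumed class order, following the one-hot columns of y_train.
class_names = ["car", "bicycle", "plane"]

# Probabilities copied from the model.predict output shown above.
probs = np.array([[1., 0., 0.],
                  [0., 1., 0.],
                  [0., 0., 1.]], dtype="float32")
y_test = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1]])

# The index of the largest probability in each row is the predicted class.
pred_ids = probs.argmax(axis=1)
pred_names = [class_names[i] for i in pred_ids]

# Fraction of test images whose predicted class matches the target.
accuracy = float((pred_ids == y_test.argmax(axis=1)).mean())

print(pred_names, accuracy)
```

One caveat: the model was trained on `fotos/255`, while the prediction call receives `fotos_test` unscaled. Passing `fotos_test/255` would keep the test preprocessing consistent with training. The probabilities would then probably be less saturated than the exact zeros and ones shown.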